Self-Driving Cars - Ethical Intelligence in Robots

How should we implement ethical language into artificially intelligent robots?

With the recent announcement of the possible launch of the Google self-driving car prototypes, autonomous driving and artificial intelligence are inching a step closer to reality. Although this is a very exciting project, many people are worried and are raising crucial safety issues. Setting aside all possible software-bug issues, my concern is more on the ethical side.

What are the Google driverless cars?

The Google Self-Driving Car is an ongoing project at Google that aims to develop the technology for completely autonomous cars - driverless, self-driving, the choice of term is yours. The car's “awareness” of its surroundings comes from a laser mounted on its roof, which generates a 3D map of the environment and allows the car to decide on its course of action. Of course, many enhancements are hypothetically possible to let the cars gather more and better data - like the ability to exchange information on speed, trajectory, and intended course of action with other self-driving cars - and this is where my worries about ethics come into play.

Some states, like Nevada, have already permitted these prototypes on their streets, and Google has announced that its first fully built, functional car featuring the technology is to be released by the end of 2014.

Essentially, this could be the first potentially mass-produced intelligent robot capable of reasoning on its own and making important decisions on our behalf, in this case decisions about our survival on the road.

As humans, we make decisions based on our own perception of what is ethical all the time, and adapt our course of action accordingly. Occasionally, we set aside this ethical reasoning to make more room for our comfort or even our survival - essentially letting ourselves be corrupted. Handing over important decision-making activities (like driving) to artificially intelligent robots means we are also depending on them to reason ethically on our behalf in certain situations.

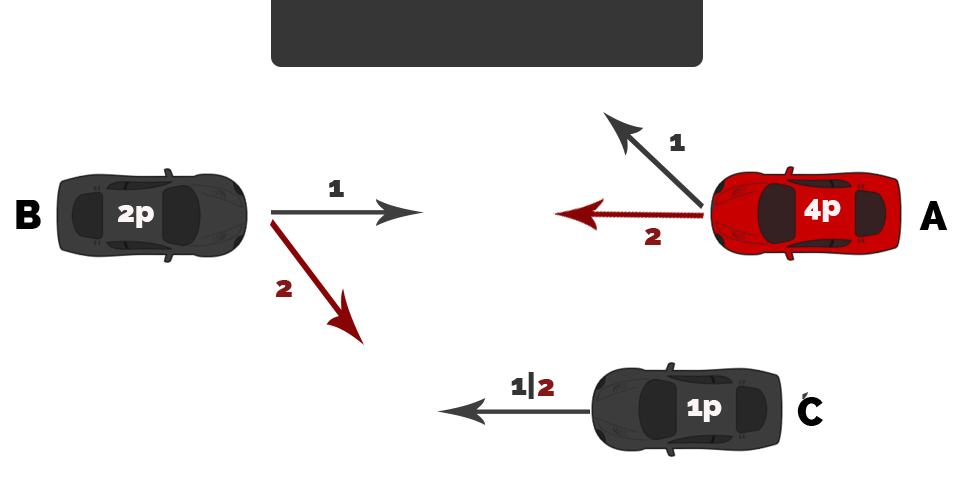

Take the image above of two cars about to collide. Driver A decides on a course of action based on his awareness of the surroundings (eyesight and hearing), and that decision will affect the outcome.

Scenario 1: Car A swerves sharply towards the wall, sparing car B from having to swerve into car C.

Scenario 2: Car A does not change its trajectory, leaving car B to swerve into car C and collide with it, which saves car A.

In such a scenario, only each driver's reaction time and will to survive count. If a computer replaces the human driver, the situation changes, as it can ignore all human instincts and rapidly gather information on the situation to make a sound decision on its course of action.

Assumption 1: In both scenarios, the passengers in any car that collides (with either the wall or another car) die with 100% certainty.

Assumption 2: Each of the “autonomous” cars in this scenario knows very precisely the outcome (the number of passengers who die) of its “decision”, given the speed, number of passengers, and trajectory of each of the other cars as well as its own.

Now that the AI cars are in control of the situation, we can see how heavily the ethical behavior implemented in those machines can affect the outcome.

Taking everything into consideration, the new “autonomous driver” in car A will 'realize' that by holding its course and letting cars B and C collide, 3 people will die (2 passengers in car B and 1 in car C) rather than the 4 passengers in car A. Is it right for the situation to be handled that way, even though car A is initially at fault for driving against the direction of traffic? How can we let AI machines decide who is to live and who is to die?
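To make the arithmetic concrete, here is a minimal sketch in Python of a purely casualty-minimizing rule applied to the two scenarios, under Assumptions 1 and 2. The names `Outcome` and `fewest_deaths` are hypothetical and introduced only for illustration; nothing here reflects how Google's software actually decides.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    """One course of action for car A and its (assumed certain) death toll."""
    label: str
    deaths: int


def fewest_deaths(outcomes):
    """Return the outcome with the lowest death toll (ties go to the first listed)."""
    return min(outcomes, key=lambda o: o.deaths)


# Passenger counts taken from the scenario: 4 in car A, 2 in car B, 1 in car C.
scenario_1 = Outcome("A swerves into the wall", deaths=4)
scenario_2 = Outcome("A holds its course; B and C collide", deaths=2 + 1)

choice = fewest_deaths([scenario_1, scenario_2])
print(choice.label, choice.deaths)
```

A rule this simple never asks who was at fault; it only counts, which is exactly what makes the question above uncomfortable.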

What if we now also assign values to the actual passengers? E.g., the US President in car C vs. six ordinary, law-abiding citizens in cars A and B. Should the cars behave differently? Should the car 'believe' that the “greater good” is keeping more people alive or keeping the highly valued person alive? In either case, we see the need to establish some kind of “ethical protocol” for AI machines to handle such situations. However, how do we decide which ethical movement is the right one to use as a “protocol”?
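A small Python sketch shows how much the answer depends on the values we assign. It assumes, purely for illustration, that every passenger gets a numeric weight and that the car minimizes the total weight lost; the function name `preferred_scenario` and the weights themselves are hypothetical placeholders, not a proposal.

```python
def preferred_scenario(president_weight, citizen_weight=1.0):
    """Pick the scenario with the smaller weighted loss of life.

    Both weights are hypothetical "values" assigned to passengers; the
    numbers carry no meaning beyond this illustration.
    """
    weighted_loss = {
        # Scenario 1: car A hits the wall and its 4 citizens die.
        "scenario 1": 4 * citizen_weight,
        # Scenario 2: cars B and C collide; 2 citizens and the President die.
        "scenario 2": 2 * citizen_weight + president_weight,
    }
    return min(weighted_loss, key=weighted_loss.get)


# Everyone counts equally: fewer deaths wins, so cars B and C collide.
equal_choice = preferred_scenario(president_weight=1.0)
# The President is (arbitrarily) valued at ten citizens: car A is sacrificed.
weighted_choice = preferred_scenario(president_weight=10.0)
print(equal_choice, weighted_choice)
```

The code is identical in both calls; only one number changes, and with it the decision about who lives.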

Since a computer only does what it is told to do, we have the power to tell it what to do in such a situation, and therefore to program those machines to behave according to each person’s ethical beliefs. However, if we let members of the public individually choose how their cars (or other machines) should behave ethically in given situations, the cars in the figure above would not act in sync, creating confusion over what the course of action should actually be.
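The coordination problem can be sketched as a toy model in Python. It assumes each car simply "votes" for the joint outcome that its owner's chosen policy prefers; the two policies, the outcome table, and every name below are hypothetical and exist only to illustrate the point.

```python
# Deaths per car for each joint outcome (Assumption 1: colliding cars lose everyone).
OUTCOMES = {
    "A swerves into the wall": {"A": 4, "B": 0, "C": 0},
    "A holds its course": {"A": 0, "B": 2, "C": 1},
}


def minimize_total_deaths(outcomes, own_car):
    """Prefer the outcome with the fewest deaths overall."""
    return min(outcomes, key=lambda name: sum(outcomes[name].values()))


def protect_own_passengers(outcomes, own_car):
    """Prefer the outcome with the fewest deaths in the owner's own car."""
    return min(outcomes, key=lambda name: outcomes[name][own_car])


def preferences(policies, outcomes=OUTCOMES):
    """Map each car to the outcome its owner-chosen policy prefers."""
    return {car: policy(outcomes, car) for car, policy in policies.items()}


def in_sync(prefs):
    """True when every car prefers the same outcome."""
    return len(set(prefs.values())) == 1


# Each owner picks a policy individually.
mixed = preferences({
    "A": protect_own_passengers,
    "B": protect_own_passengers,
    "C": minimize_total_deaths,
})
# Every car follows one shared policy.
unified = preferences({car: minimize_total_deaths for car in "ABC"})

print(mixed, in_sync(mixed))
print(unified, in_sync(unified))
```

With individually chosen policies, car B expects car A to swerve while cars A and C expect it to hold its course. With a single shared policy, all three cars agree on what should happen, whatever one thinks of the result.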

Can ethical movements (e.g., Utilitarianism) become the next AI protocols, much as we choose between TCP and UDP today? Should we set up a unified ethical protocol for all AI machines, or at least for given situations? Is ethical programming a new field to be delved into? Should we implement ethical behavior in machines at all?